run spark-submit with -master yarn -deploy-mode cluster.set HADOOP_USER_NAME to override OS user, to avoid “Permission denied: user=xxxx, access=WRITE, inode=”/user/xxxx/.sparkStaging/application_xxxxx”:hdfs:hdfs:drwxr-xr-x” (Assumes ‘simple’/no authentication on cluster).set HADOOP_CONF_DIR to the path where those XML files live.

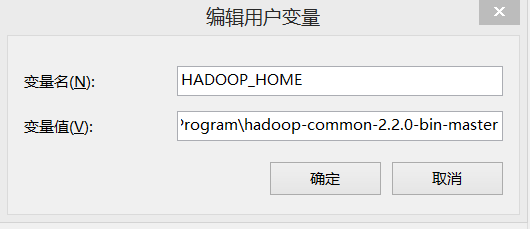

(Assumes the EMR cluster’s security group allows your workstation to connect in the first place.) Set up bare-bones Hadoop config files - only need settings to specify how client connects to cluster.Otherwise it will look like permissions are fixed, but they aren’t Note that it is important to use the 64-bit version of winutils if you are on a 64-bit system.solution: %HADOOP_HOME%\bin\winutils.exe chmod 777 \tmp\hive.Solving permissions on \tmp\hive (“The root scratch dir: /tmp/hive on HDFS should be writable.set HADOOP_HOME environment var to where you put that exe - Spark will look for %HADOOP_HOME%\bin\winutils.exe so make sure you don’t include ‘bin’ in the HADOOP_HOME var!.download this exe and install it somewhere in a ‘bin’ subfolder.Solving the winutils.exe dependency (“Could not locate executable null\bin\winutils.exe”).Windows will struggle with paths that contain spaces, so best to install or link it from somewhere else. Make sure you have appropriate JDK installed and JAVA_HOME environment set properly.First, download the Spark and Hadoop binaries.Getting Spark to run on Windows in general Here are all the issues I had to work through. I managed to get Spark to run on Windows in local mode, and to submit jobs to an EMR cluster in AWS.
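
The EMR submission settings (HADOOP_CONF_DIR, HADOOP_USER_NAME, and the yarn/cluster flags) can be put together in a small Python wrapper. This is only a sketch: the function name `build_submit`, the job file `my_job.py`, the folder `C:\hadoop-conf`, and the user `hadoop` are all hypothetical placeholders, not values from my setup.

```python
import os


def build_submit(app_path, conf_dir, user_name, base_env=None):
    """Return (argv, env) for a cluster-mode spark-submit against YARN."""
    env = dict(os.environ if base_env is None else base_env)
    # Folder holding the bare-bones client-side Hadoop XML files.
    env["HADOOP_CONF_DIR"] = conf_dir
    # Overrides the OS user; only honoured with 'simple' authentication.
    env["HADOOP_USER_NAME"] = user_name
    argv = ["spark-submit", "--master", "yarn",
            "--deploy-mode", "cluster", app_path]
    return argv, env


# Hypothetical values, for illustration only.
argv, env = build_submit("my_job.py", r"C:\hadoop-conf", "hadoop")
print(" ".join(argv))
# To actually launch: subprocess.run(argv, env=env, check=True)
# On Windows the launcher is spark-submit.cmd, so you may need the
# full path to it or shell=True.
```

Building the environment as a copy keeps the override local to the one submission, rather than changing the variables for your whole session.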

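The Windows pitfalls above (spaces in the JDK path, 'bin' included in HADOOP_HOME, a missing winutils.exe) are easy to check for before launching Spark. Here is a minimal sketch of such a check; the function name `check_windows_spark_env` and the warning wording are my own invention, and it does not verify the 32-bit vs 64-bit winutils issue.

```python
import os
from pathlib import PureWindowsPath


def check_windows_spark_env(env, file_exists=os.path.isfile):
    """Return a list of likely problems with JAVA_HOME / HADOOP_HOME."""
    problems = []

    java_home = env.get("JAVA_HOME", "")
    if not java_home:
        problems.append("JAVA_HOME is not set")
    elif " " in java_home:
        problems.append("JAVA_HOME contains spaces")

    hadoop_home = env.get("HADOOP_HOME", "")
    if not hadoop_home:
        problems.append("HADOOP_HOME is not set")
    else:
        home = PureWindowsPath(hadoop_home)
        if home.name.lower() == "bin":
            problems.append("HADOOP_HOME should not include the 'bin' folder")
        # Spark looks for %HADOOP_HOME%\bin\winutils.exe
        winutils = str(home / "bin" / "winutils.exe")
        if not file_exists(winutils):
            problems.append("winutils.exe not found at " + winutils)

    return problems


for problem in check_windows_spark_env(os.environ):
    print("WARNING:", problem)
```

The `file_exists` parameter is there only so the check can be exercised without touching the real file system.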