“ have not caught up with AI’s unique risks or society’s needs.

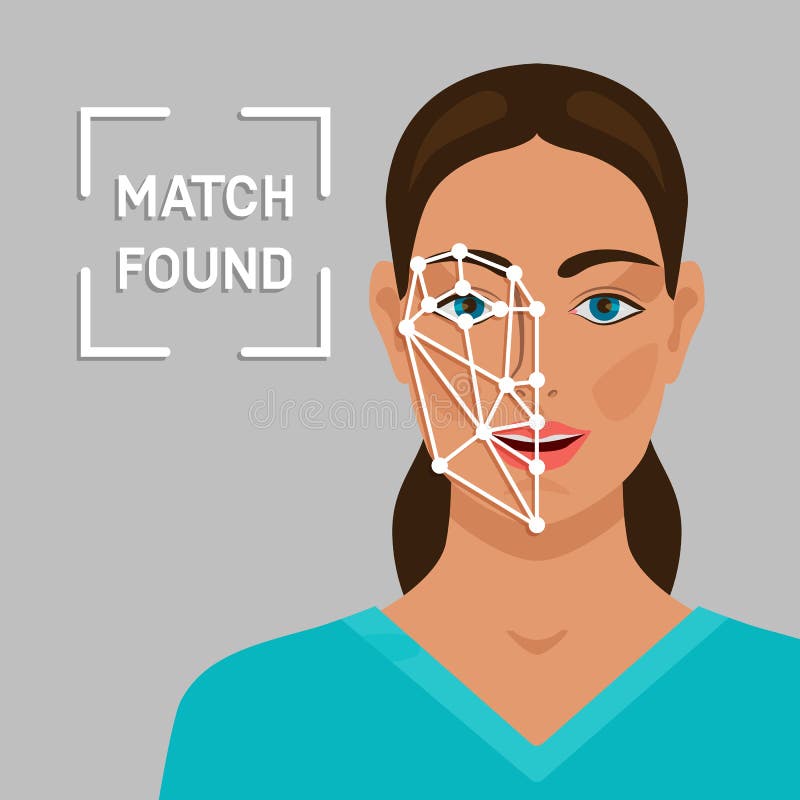

“The potential of AI systems to exacerbate societal biases and inequities is one of the most widely recognized harms associated with these systems,” she continued. Facial recognition has been deemed inappropriate, and Microsoft will retire Azure services that infer “emotional states and identity attributes such as gender, age, smiles, facial hair, hair and makeup,” Crampton said. In a blog post, Microsoft’s chief responsible AI officer Natasha Crampton said the company has recognized that for AI systems to be trustworthy, they must be appropriate solutions for the problems they’re designed to solve. and Facebook’s parent company Meta Platforms Inc. The controversy around facial recognition has been taken seriously by tech firms, with both Amazon Web Services Inc. announced it was considering adding “emotion AI” features, the privacy group Fight for the Future responded by launching a campaign urging it not to do so, over concerns the tech could be misused. Earlier this year, when Zoom Video Communications Inc. In particular, the use of AI tools that can detect a person’s emotions has become especially controversial.

This can lead to big implications when AI is used to identify criminal suspects and in other surveillance situations. Previous studies have demonstrated that the technology is far from perfect, often misidentifying female subjects and those with darker skin at a disproportionate rate.

New users will no longer have access to those features, while existing customers will have to stop using them by the end of the year, Microsoft said.įacial recognition technology has become a major concern for civil rights and privacy groups. To meet these standards, Microsoft has chosen to limit access to the facial recognition tools available through its AzureFace API, Computer Vision and Video Indexer services. The company announced the decision today as it published a 27-page “ Responsible AI Standard” that explains its goals with regard to equitable and trustworthy AI. says it will phase out access to a number of its artificial intelligence-powered facial recognition tools, including a service that’s designed to identify the emotions people exhibit based on videos and images.